LLM basics from scratch

Abstract

The main purpose of this article is to use basic self-attention blocks to build a simple large language model for learning purposes. Due to limitations in model scale and embedding methods, the model built in this article will not be very effective, but this does not affect the ability to learn various basic concepts of language models similar to Transformer through the code provided in this article.

What happens on this page:

- get full code of a basic Large(?) Language Model (data preparation, model architecture, model training and predicting)

- understand the general architecture of transformer with a few illustrations

- understand how self regressive training works

- understand how to load very large text dataset into limited memory (OpenWebTextCorpus)

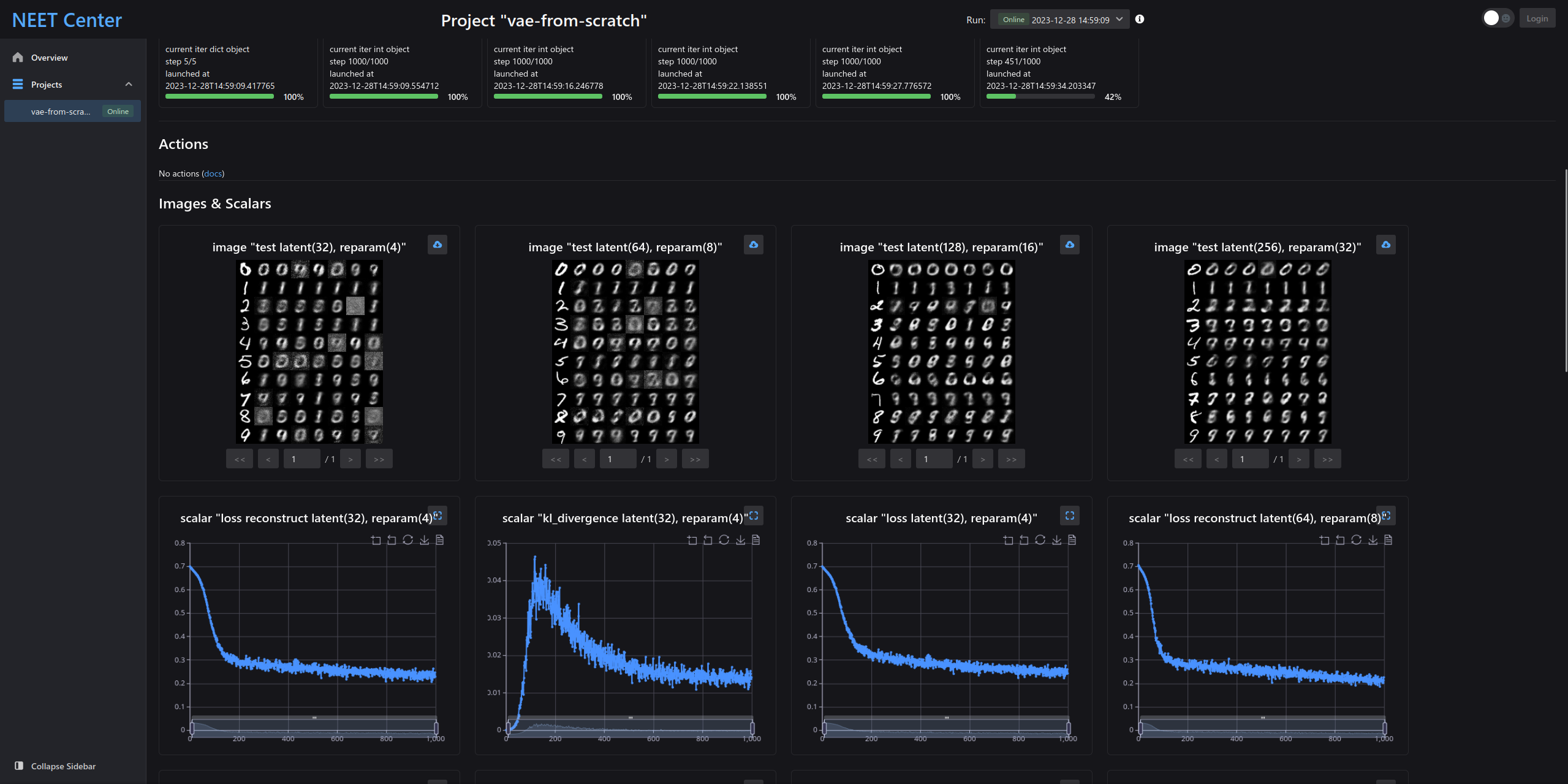

- train and observe the training procedure

- load the trained model into a simple ask-and-answer interactive script

Get full code

Code available at github.com/visualDust/naive-llm-from-scratch

Download the code via git clone before continue.