Amhdal's Law

Speedup of Parallel Execution

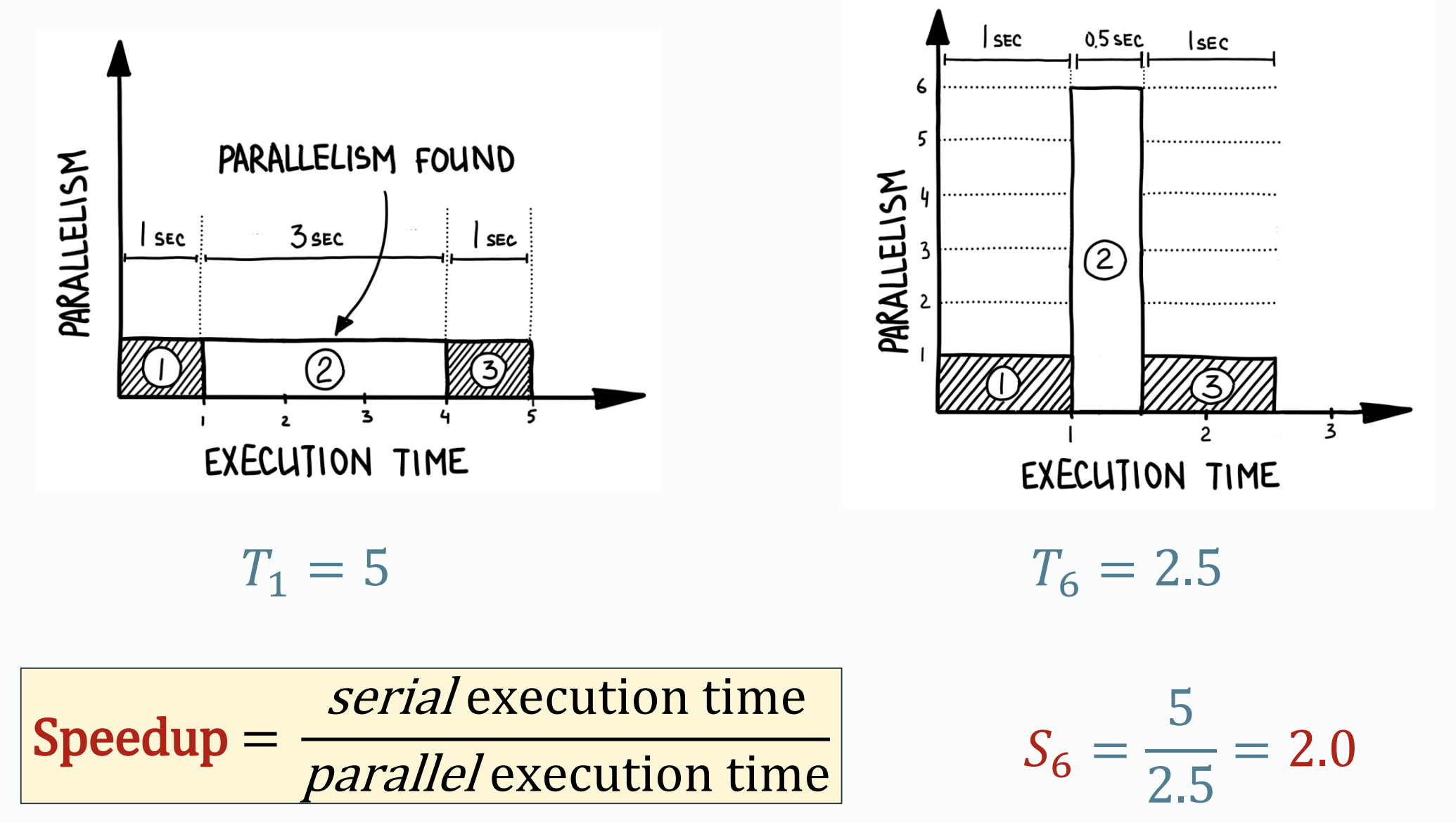

Definition: Speed up Speed up is defined as:

Note that the time of serial execution should be the time for serial execution of best serial algorithm because bad programs often have good speedup.

Suppose that a program is composed of a serial phase and a parallel phase, the whole program runs for time unit, the serial phase runs for time , and the parallel phase for time .

Amdahl's Law

Amdahl's law Regardless how many processors are used, the execution time of the program will be at least , and the speed up will be no more than .

Again, suppose a machine with processors, the serial phase takes time and the parallel phase takes time if run on a single processor. Therefore:

- For the parallel phase, it takes on this machine.

- For the serial phase, it only runs on a single processor and it takes time . Let be the ratio of serial time to total execution time:

We can know that . The conclusion here is that if you have unlimited number of processors, the time consumption of a parallel program could be theoretically .

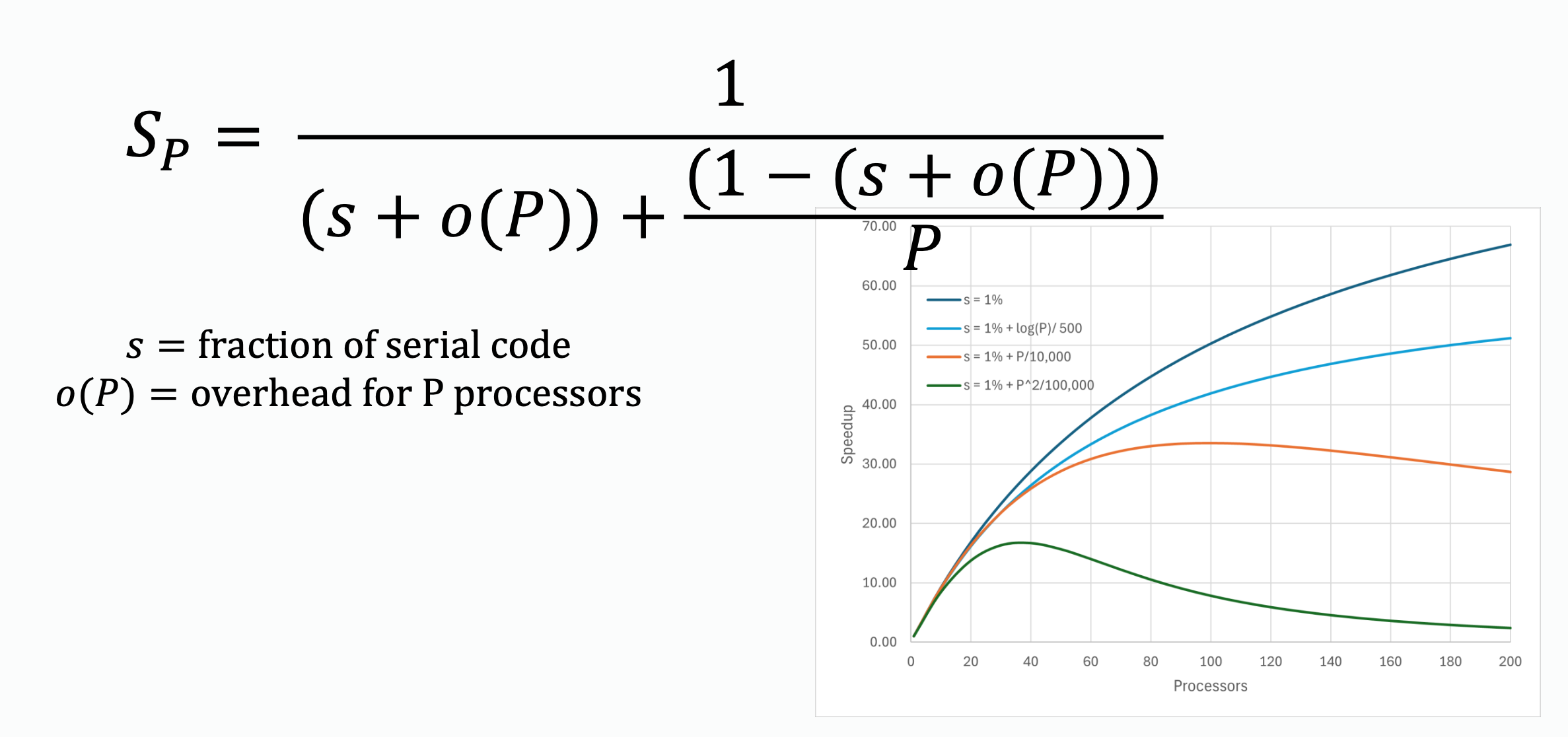

Adding cores(processors) causes overheads, as the processors need to communicate to switch information with each other, or they need to do some synchronization. Therefore, the actual calculation on speed up should consider the overhead term caused by using processors.

The overhead term does not benefits from parallel execution, so it is considered as a constant.

The overhead term sometimes becomes significant when it is growing with the growth of number of processors dramatically. Refer to the green line in the figure.

The overhead term does not benefits from parallel execution, so it is considered as a constant.

The overhead term sometimes becomes significant when it is growing with the growth of number of processors dramatically. Refer to the green line in the figure.

Definition Efficiency is defined as:

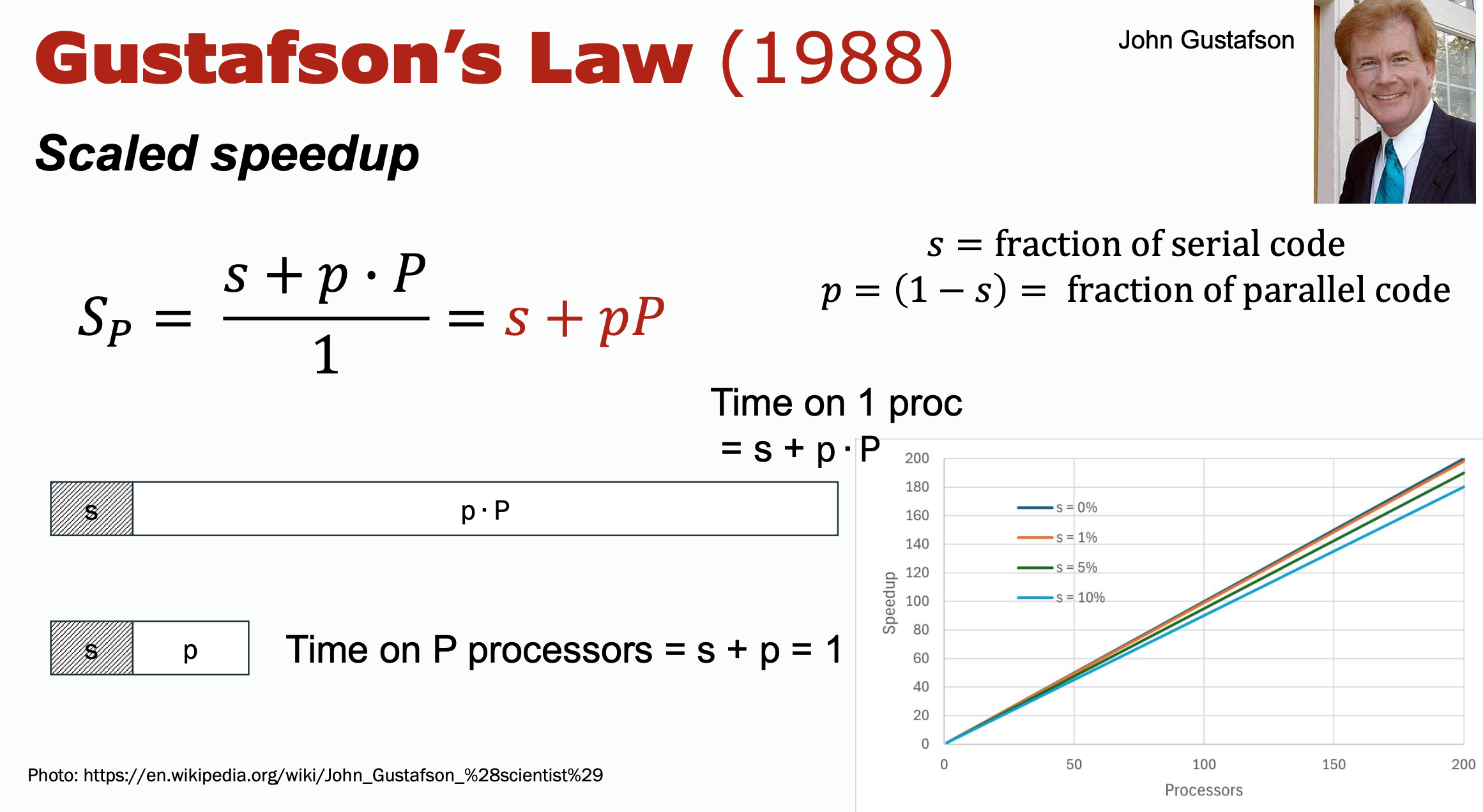

However, considering efficiency , , which means that adding more processors brings the consequence of dropping efficiency, you are wasting processors and the efficiency is very lower. But the truth is, as increases, increase too, and the fraction of time does not necessarily shrink with increasing , and efficiency remains reasonable.

Gustafson's Law (scaled speedup)

Another law that measures speedup

Amdahl’s Law is pessimistic, focusing on the inherent limitations due to the non-parallelizable portion of the workload. Gustafson’s Law is more optimistic, suggesting that increasing the problem size with more processors allows for greater speedup. Amdahl’s Law is more applicable when the problem size is fixed. Gustafson’s Law applies better when the problem size can scale with the number of processors.

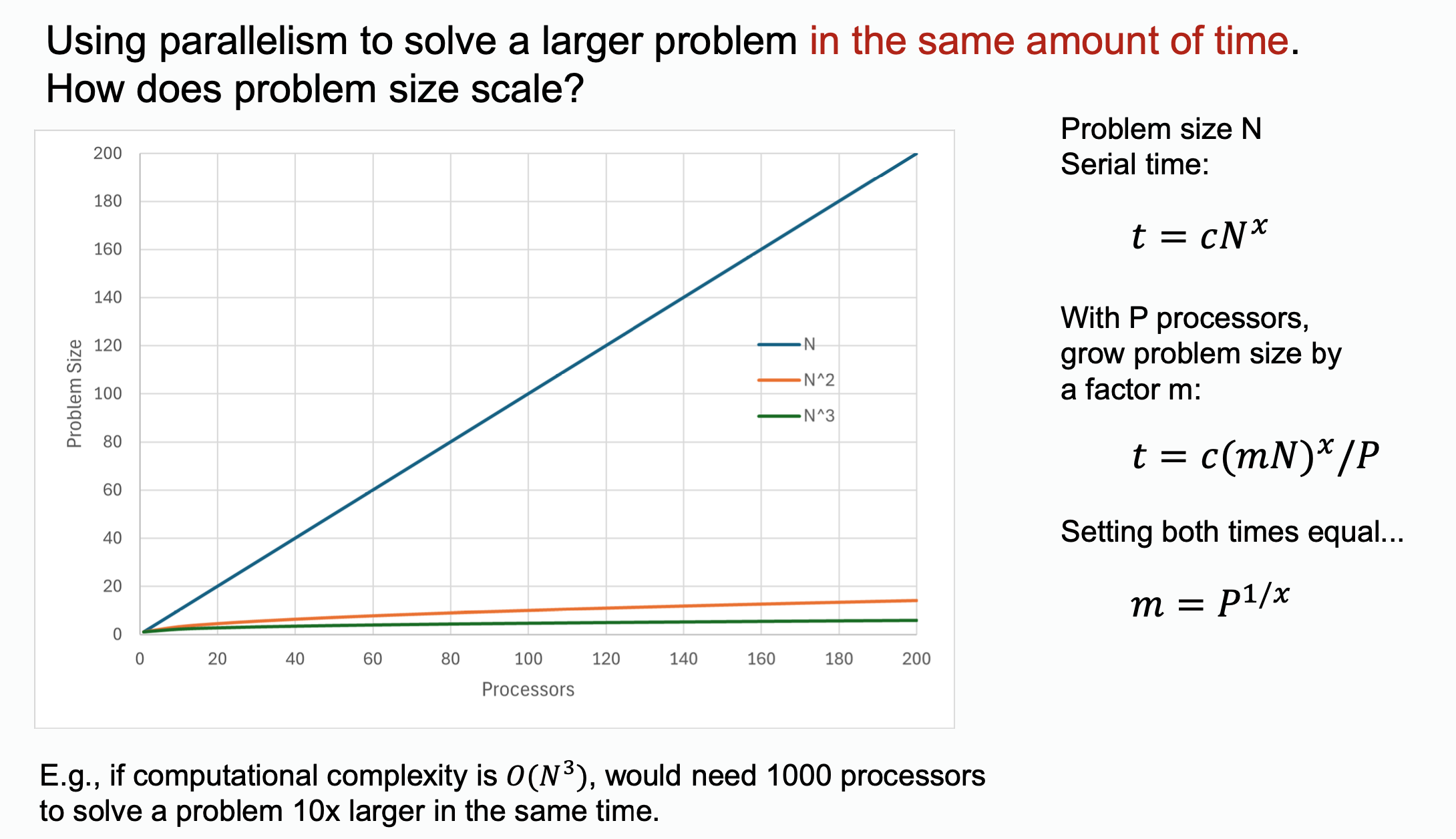

How much bigger can the problem scale that it takes same time for a specific number of processors to solve the problem?