Data Dependency

Data Dependences

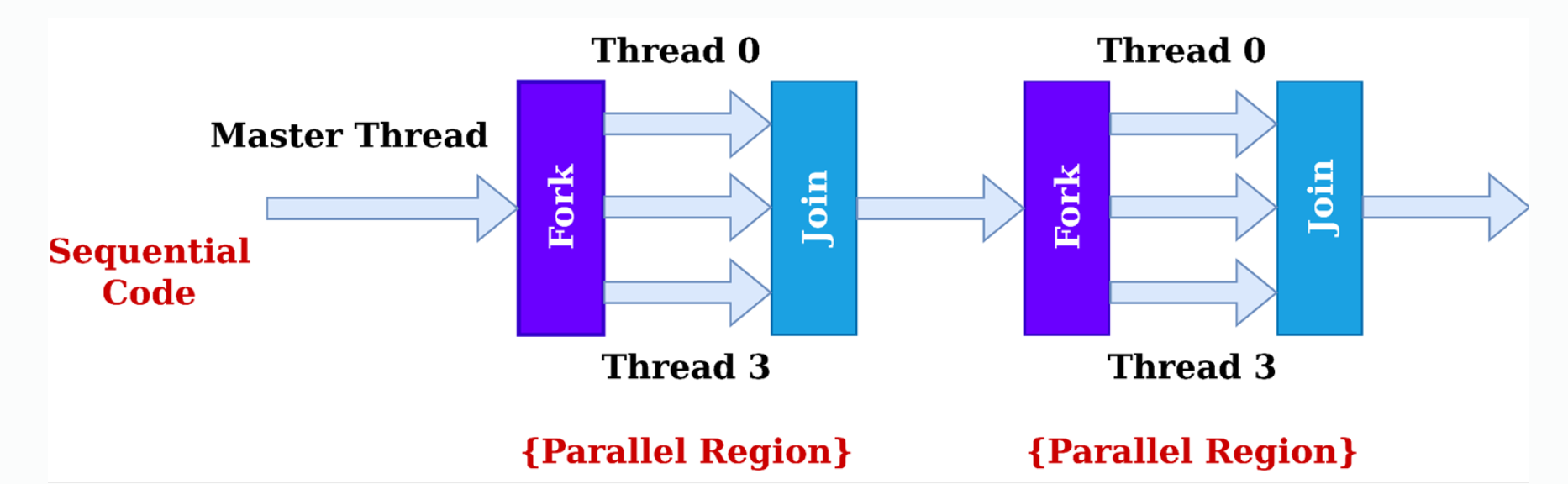

concurrent != parallel control parallel != data parallel

speed up limitations:

- dependencies

- communication overheads

- synchronization

dependencies that prevent parallel execution:

- resource dependency: wash multiple clothes with single watching machine measure parallelism: Flynn's Taxonomy

Definition: Data Dependency Assuming statement and , depends on if:

where

- is the set of memory locations read by

- is the set of memory locations written by

Three cases exist:

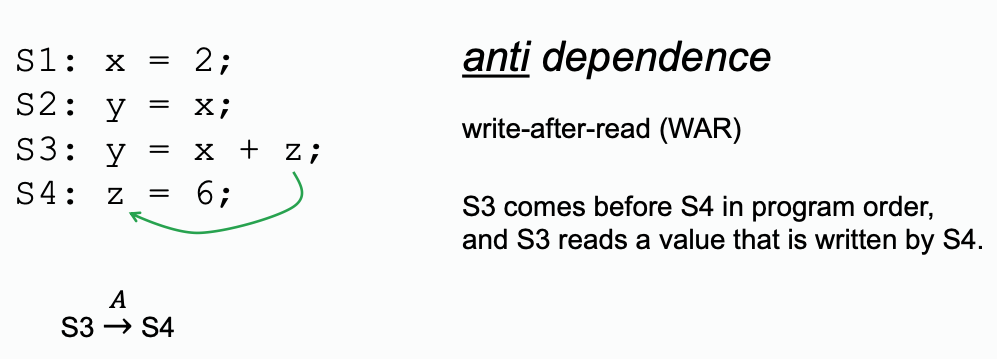

- Anti-dependency (Write After Read): and reads before overwrites it. Written as .

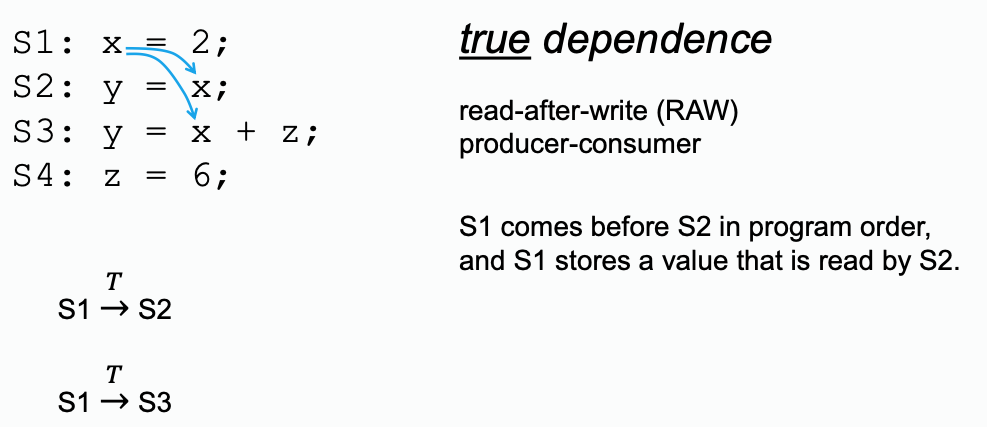

- True dependency (Read After Write): and writes before something read by . Written as

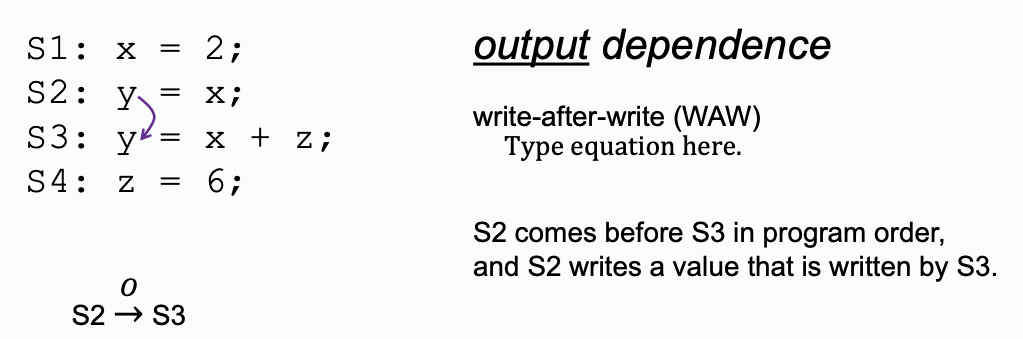

- Output dependency (Write After Write): and both write to the same memory location. Written as

There will be no concurrency problems for read after read, only with write the problem is to be addressed.

Example: True Dependency

Example: Anti Dependency

Example: Output Dependency

True dependence is the case that we most worry about.

- For anti-dependence

- For true dependence:

- For output dependence: None

Loop-carried Dependences

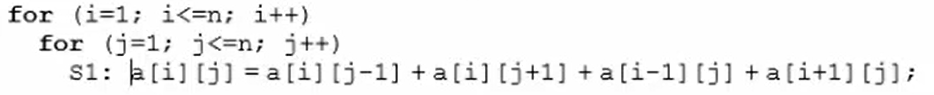

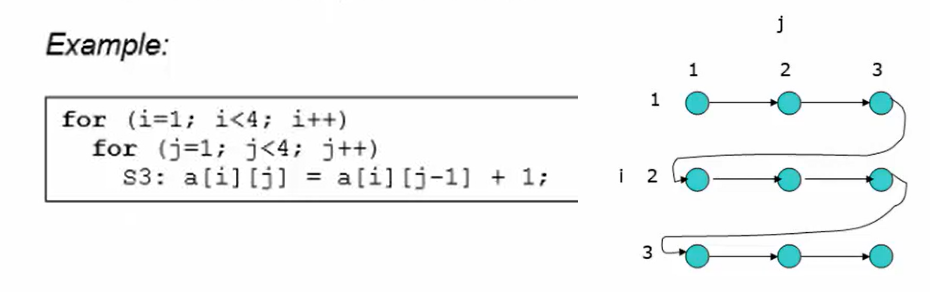

What is Iteration-space Traversal Graph (ITG)? The ITG shows graphically the order of traversal in the iteration space. This is sometimes called the happens-before relationship. In an ITG:

- A node represents a point in the iteration space

- A directed edge indicates the next-point that will be encountered after the current point is traversed

In example above:

In example above:

- It is loop-carried on

for jloop - There is no loop-carried dependence in

for iloop

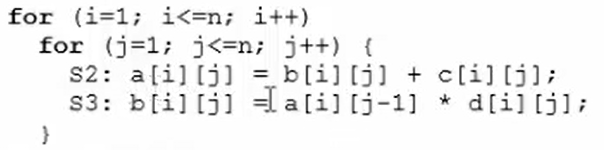

- For anti-dependence:

- For true dependence:

- For output dependence: None

Draw the LDG:

LDG is useful because it shows how can you parallel the code, for the above example, applying parallelism along axis(modifying only in each thread) is possible according to the LDG.

[!TIP] Why is the anti-dependence not shown on the graph? LDG does not show loop-independent dependences, because a node represents all statements in a particular iteration,

Identifying things that can be written in parallel code with LDG is machine independent, which means that we do not care about memory access patterns, and machine dependent factors.

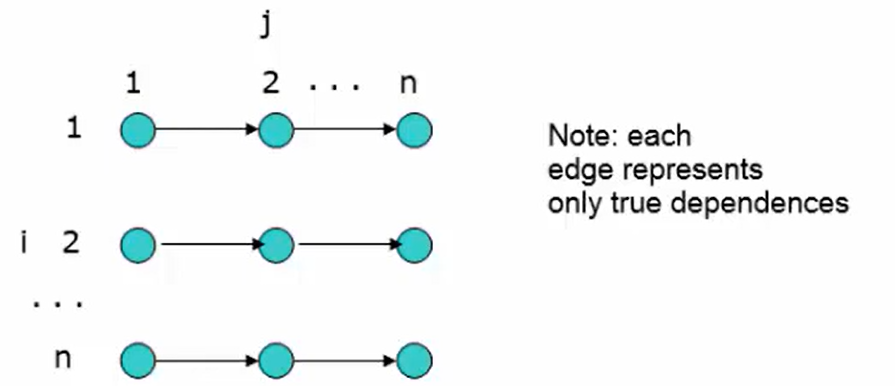

OpenMP

#pragma omp parallel for

for(int i=1; i<n; i++)

for(int j=1; j<N, j++)

a[i][j] = a[i][j-1] + 1;